Study Log (2019.12)

2019-12-31

- Reinforcement Learning

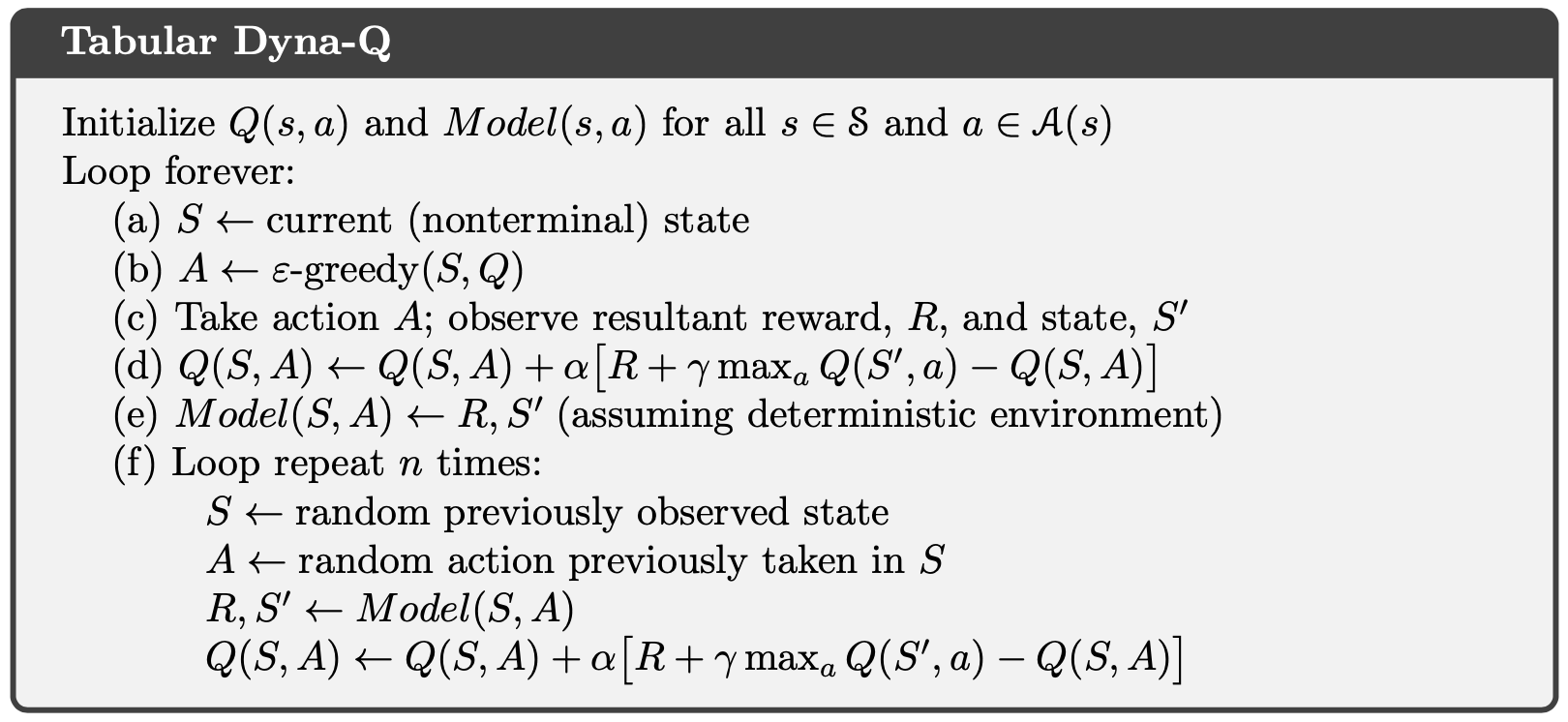

- Chapter 8. Planning and Learning with Tabular Methods

- 8.1 Models and Planning

- 8.2 Dyna: Integrated Planning, Acting, and Learning

- Page #166

- Chapter 8. Planning and Learning with Tabular Methods

2019-12-30

- Reinforcement Learning

- Chapter 7. n-step Bootstrapping

- 7.4 Per-decision Methods with Control Variates

- 7.5 Off-policy Learning Without Importance Sampling: The n-step Tree Backup Algorithm

- 7.6 A Unifying Algorithm: n-step Q($\sigma$)

- 7.7 Summary

- Chapter 8. Planning and Learning with Tabular Methods

- 8.1 Models and Planning

- Page #161

- Chapter 7. n-step Bootstrapping

- endtoendAI

2019-12-29

- Reinforcement Learning

- Chapter 7. n-step Bootstrapping

- 7.4 Per-decision Methods with Control Variates

- Page #151

- Chapter 7. n-step Bootstrapping

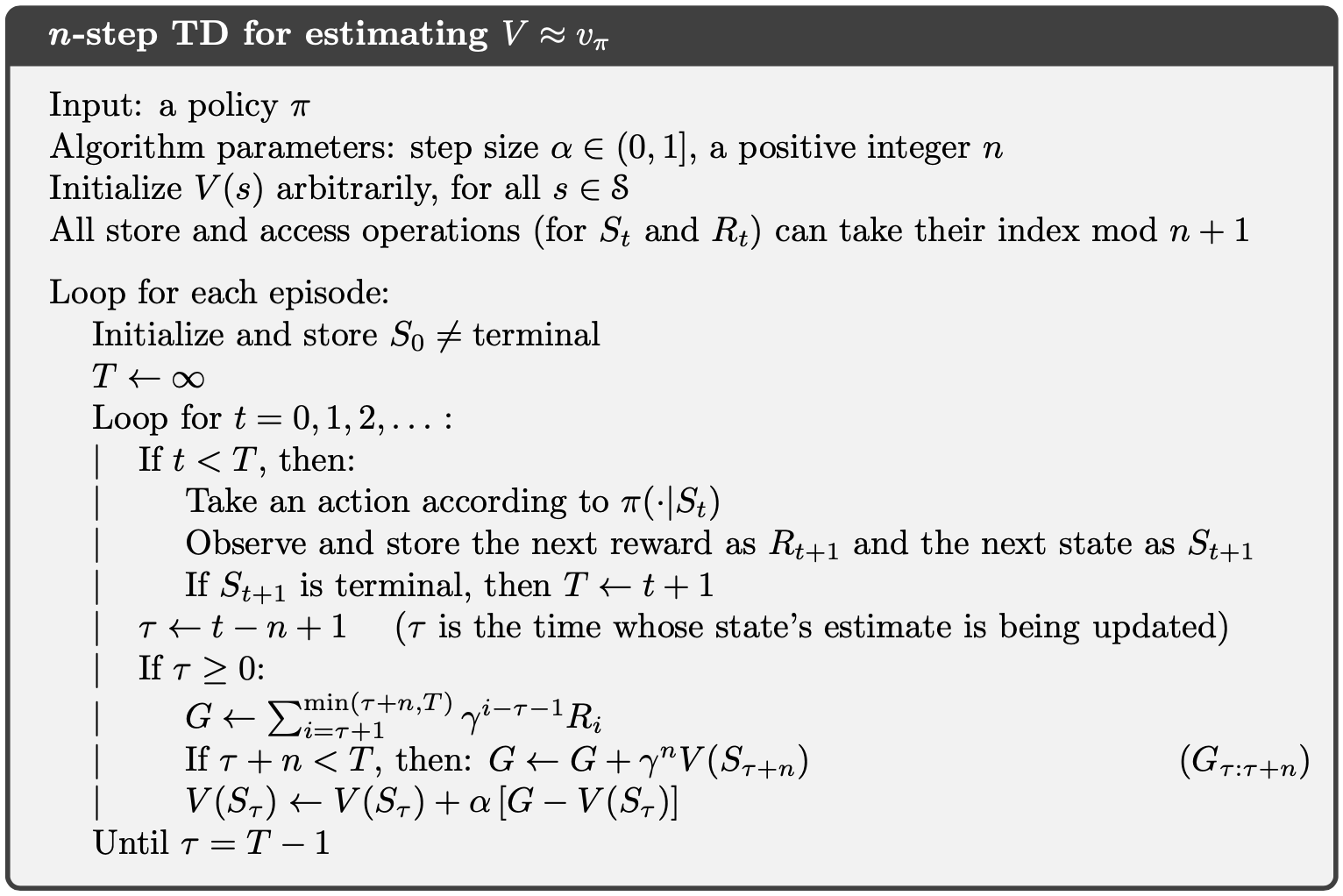

- N-step TD Method

- 모두를 위한 머신러닝/딥러닝 강의

2019-12-28

- Reinforcement Learning

- Chapter 7. n-step Bootstrapping

- 7.1 n-step TD Prediction

- 1) n-step까지 Discounted Reward 합계 G 계산 : $G \leftarrow \sum\nolimits_{i=\tau+1}^{min(\tau+n,T)} \gamma^{i-\tau-1} R_i$

- 2) n-step에서의 Value 계산 (n-step 이후의 Reward 함축) : $G \leftarrow G + \gamma^n V(\color{red}{ S_{\tau+n} })$

- 3) V 업데이트 : $V(S_\tau) \leftarrow V(S_\tau) + \alpha [G - V(\color{red}{ S_\tau })]$

- RandomWalk.py

- 1) n-step까지 Discounted Reward 합계 G 계산 : $G \leftarrow \sum\nolimits_{i=\tau+1}^{min(\tau+n,T)} \gamma^{i-\tau-1} R_i$

- 7.2 n-step Sarsa

- 7.3 n-step Off-policy Learning

- 7.4 Per-decision Methods with Control Variates

- 7.1 n-step TD Prediction

- Page #150

- Chapter 7. n-step Bootstrapping

2019-12-27

- Reinforcement Learning

- Chapter 6. Temporal-Difference Learning

- 6.6 Expected Sarsa

- 6.7 Maximization Bias and Double Learning

- 6.8 Games, Afterstates, and Other Special Cases

- 6.9 Summary

- Chapter 7. n-step Bootstrapping

- 7.1 n-step TD Prediction

- Page #143

- Chapter 6. Temporal-Difference Learning

- 모두를 위한 머신러닝/딥러닝 강의

- Lecture #33) lec10-4: 레고처럼 넷트웍 모듈을 마음껏 쌓아 보자

- Lecture #34

- Lecture #35

- Lecture #36

- Lecture #37

- Lecture #38

- Lecture #39

2019-12-25

- Reinforcement Learning

- Chapter 6. Temporal-Difference Learning

- 6.1 TD Prediction

- 6.2 Advantages of TD Prediction Methods

- 6.3 Optimality of TD(0)

- 6.4 Sarsa: On-policy TD Control

- 6.5 Q-learning: Off-policy TD Control

- Page #133

- Chapter 6. Temporal-Difference Learning

2019-12-24

- SanghyukChun’s Blog

- Reinforcement Learning

- Chapter 5. Monte Carlo Methods

- 5.7 Off-policy Monte Carlo Control

- 5.8 Discounting-aware Importance Sampling

- 5.9 Per-decision Importance Sampling

- 5.10 Summary

- Page #119

- Chapter 5. Monte Carlo Methods

2019-12-23

2019-12-22

- SanghyukChun’s Blog

- Machine Learning 스터디 (5) Decision Theory

- Machine learning 스터디 (6) Information Theory

- Machine learning 스터디 (7) Convex Optimization

- Machine learning 스터디 (8) Classification Introduction (Decision Tree, Naïve Bayes, KNN)

- Machine learning 스터디 (13) Clustering (K-means, Gaussian Mixture Model)

- Machine Learning 스터디 (14) EM Algorithm

- Machine Learning 스터디 (16) Dimensionality Reduction (PCA, LDA)

- 모두를 위한 머신러닝/딥러닝 강의

- Lecture #31) lec10-2: Weight 초기화 잘해보자

- Lecture #32

2019-12-21

2019-12-20

- 숨니의 무작정 따라하기

- [Ch.8] Value Function Approximation

- [Ch.9] DQN(Deep Q-Networks)

- [Part 0] Q-Learning with Tables and Neural Networks

- [Part 1] Multi-armed Bandit

- [Part 1.5] Contextual Bandits

- [Part 2] Policy-based Agents(Cart-Pole Problem)

- [Part 3] Model-based RL

- [Part 4] Deep Q-Networks and Beyond

- [Part 5] Visualizing an Agent’s Thoughts and Actions

- [Part 6] Partial Observability and Deep Recurrent Q-Networks

2019-12-19

2019-12-17

2019-12-16

2019-12-15

- 강화학습 기초부터 DQN까지

- Page #143

- 숨니의 무작정 따라하기

2019-12-14

- Reinforcement Learning

- Chapter 5. Monte Carlo Methods

- 5.5 Off-policy Prediction via Importance Sampling

- 5.6 Incremental Implementation

- Page #110

- Chapter 5. Monte Carlo Methods

- 강화학습 기초부터 DQN까지

- Page #90

2019-12-10

- Reinforcement Learning

- Chapter 4. Dynamic Programming

- 4.2 Policy Improvement

- 4.3 Policy Iteration

- 4.4 Value Iteration

- 4.5 Asynchronous Dynamic Programming

- 4.6 Generalized Policy Iteration

- 4.7 Efficiency of Dynamic Programming

- 4.8 Summary

- Chapter 5. Monte Carlo Methods

- 5.1 Monte Carlo Prediction

- 5.2 Monte Carlo Estimation of Action Values

- 5.3 Monte Carlo Control

- 5.4 Monte Carlo Control without Exploring Starts

- 5.5 Off-policy Prediction via Importance Sampling

- Page #104

- Chapter 4. Dynamic Programming

2019-12-04

- Reinforcement Learning

- Chapter 3. Finite Markov Decision Processes

- 3.1 The Agent–Environment Interface

- 3.2 Goals and Rewards

- 3.3 Returns and Episodes

- 3.4 Unified Notation for Episodic and Continuing Tasks

- 3.5 Policies and Value Functions

- 3.6 Optimal Policies and Optimal Value Functions

- 3.7 Optimality and Approximation

- 3.8 Summary

- Chapter 4. Dynamic Programming

- 4.1 Policy Evaluation (Prediction)

- 4.2 Policy Improvement

- Page #79

- Chapter 3. Finite Markov Decision Processes

2019-12-03

- Reinforcement Learning

- Chapter 2. Multi-armed Bandits

- 2.7 Upper-Confidence-Bound Action Selection

- 2.8 Gradient Bandit Algorithms

- 2.9 Associative Search (Contextual Bandits)

- 2.10 Summary

- Page #47

- Chapter 2. Multi-armed Bandits

2019-12-02

- 모두를 위한 머신러닝/딥러닝 강의

- Reinforcement Learning

- Chapter 1. Introduction

- 1.4 Limitations and Scope

- 1.5 An Extended Example: Tic-Tac-Toe

- 1.6 Summary

- Chapter 2. Multi-armed Bandits

- 2.1 A k-armed Bandit Problem

- 2.2 Action-value Methods

- 2.3 The 10-armed Testbed

- 2.4 Incremental Implementation

- 2.5 Tracking a Nonstationary Problem

- 2.6 Optimistic Initial Values

- Page #35

- Chapter 1. Introduction

2019-12-01

- Fundamental of Reinforcement Learning

- Chapter 4. Dynamic Programming

- Chapter 5. Monte-Carlo Methods

- 모두를 위한 머신러닝/딥러닝 강의

- Lecture #25) lec9-1: XOR 문제 딥러닝으로 풀기

- Lecture #26

- Lecture #27

- Lecture #28

- Lecture #29

- Reinforcement Learning

- Chapter 1. Introduction

- 1.1 Reinforcement Learning

- 1.2 Examples

- 1.3 Elements of Reinforcement Learning

- Page #7

- Chapter 1. Introduction

2019-11-30

- 모두를 위한 머신러닝/딥러닝 강의

- Lecture #14) ML lec 6-1 - Softmax Regression: 기본 개념 소개

- Lecture #15

- Lecture #16

- Lecture #17

- Lecture #18

- Lecture #19

- Lecture #20

- Lecture #21

- Lecture #22

- Lecture #23

- Lecture #24

- 파이썬과 케라스로 배우는 강화학습

-

- 강화학습 심화 3: 아타리

-

2019-11-29

- 강화학습 관련 자료

- Fundamental of Reinforcement Learning

- Chapter 1. Introduction

- Chapter 2. Markov Decision Process

- Chapter 3. Bellman Equation

Comments